Thousands of ChatGPT conversations leaked online in August 2025, revealing how users treat AI as a therapist, lawyer, and confidant. The leak highlighted not just a product flaw but also the risks of oversharing sensitive information with AI chatbots.

The exposed data included resumes, personal identifiers, health discussions, and private advice requests. While some assumed a technical breach, researchers clarified that the leak stemmed from a now-removed feature that allowed users to make chats “discoverable.” Those chats turned into public webpages indexed by search engines.

How the Leak Happened

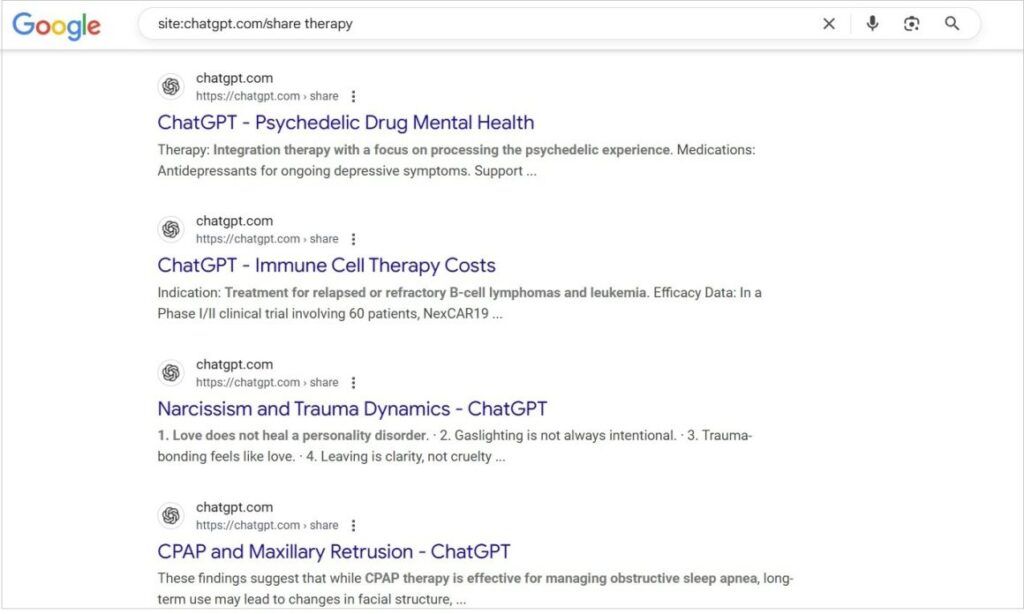

OpenAI introduced a feature that let users share conversations publicly. Many users, unaware of the implications, toggled the option believing it meant private sharing. In reality, these chats became searchable web pages.
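Whether a public page can appear in search results depends partly on the robots directives it carries. The sketch below is an illustration of that general mechanism, not a description of how OpenAI's share pages were built: it inspects an HTML document for a `<meta name="robots">` tag and reports whether the page asks search engines to skip it. The class and function names (`RobotsMetaParser`, `is_indexable`) are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute names arrive lowercased; values may be None.
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            for part in attr_map.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def is_indexable(html: str) -> bool:
    """Return False if the page carries a 'noindex' or 'none' robots directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return not ({"noindex", "none"} & parser.directives)


if __name__ == "__main__":
    shared_page = '<html><head><title>Shared chat</title></head></html>'
    protected_page = '<html><head><meta name="robots" content="noindex"></head></html>'
    print(is_indexable(shared_page))
    print(is_indexable(protected_page))
```

A page with no such directive is indexable by default once a crawler can reach it, which is why an opt-in sharing toggle can have wider consequences than users expect. This check covers only the meta tag; the same directives can also be sent in an `X-Robots-Tag` HTTP response header, which this sketch does not examine.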

Attackers and data miners quickly scraped the content, compiling thousands of conversations into repositories. These collections spread across forums and GitHub mirrors before OpenAI disabled the feature.

What the Chats Revealed

Analysis of the leaked data showed widespread oversharing:

- Users uploaded resumes, cover letters, and job histories for rewriting.

- Others shared medical histories and therapy-style conversations.

- Legal advice queries contained contract details and dispute information.

- Students posted entire assignments and exam prompts.

The chats also exposed company-specific details as employees asked ChatGPT to draft emails, debug code, or summarize internal documents. This raised concerns about corporate data leakage through AI interactions.

Leaked ChatGPT chats in Google search results (Source: Google & PCMag)

Risks of Oversharing with AI

The incident highlights a human factor often overlooked in AI security. Users tend to treat chatbots like trusted advisors, forgetting they are digital tools whose conversations may be logged or shared.

“People disclose things to AI that they might never tell another person,” one analyst noted. “Once those conversations leak, the consequences range from identity theft to reputational damage.”

The ChatGPT leak underscores a growing issue: product design choices, combined with unclear privacy warnings, can lead to mass exposure without a traditional “hack.”

OpenAI’s Response

OpenAI removed the discoverable chats feature and issued a statement acknowledging that design decisions contributed to the exposure. The company said it was taking steps to prevent future confusion by clarifying how data is stored and shared.

The firm also urged users not to input sensitive personal, legal, or financial details into AI conversations unless explicitly necessary.
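One way to act on this advice is to strip obvious identifiers from text before pasting it into a chatbot. The following is a minimal sketch under stated assumptions: the patterns and the `redact` helper are hypothetical, cover only email addresses, US-style Social Security numbers, and phone-like digit runs, and will miss many kinds of sensitive data (names, addresses, health details).

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
# Order matters: SSNs are replaced before the looser phone pattern runs.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each match with a bracketed label such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Email jane@example.com or call 555-123-4567, SSN 123-45-6789."
    print(redact(prompt))
```

Pattern matching of this kind reduces accidental exposure but is not a guarantee. The phone pattern in particular is loose and may also redact other long digit sequences, so the output still deserves a manual read before it is shared.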

Industry Reactions

Privacy advocates said the incident serves as a warning about how quickly human behavior can turn into a security problem. “The leak wasn’t about a technical exploit — it was about design and trust,” one researcher explained.

Others compared the event to earlier cloud misconfiguration leaks, where users accidentally exposed storage buckets or databases due to default settings. In this case, the “make discoverable” option blurred the line between private and public data.

The leaked ChatGPT chats reveal that AI adoption is outpacing user awareness of privacy risks. As tools like ChatGPT become everyday companions, the boundary between personal expression and secure data handling blurs.

For individuals, the leak is a reminder to exercise caution when discussing personal or professional matters with AI. For enterprises, it is a wake-up call that policies for AI usage must be as strict as those governing email or cloud services.

Ultimately, the incident shows that AI security isn’t just about model alignment or system breaches; it’s also about human trust and design transparency.