Security researchers just dropped a bombshell about ChatGPT’s newest feature – the AgentFlayer exploit can silently steal your sensitive data from Google Drive, SharePoint, and other connected services without you even knowing it happened. This zero-click attack uses “poisoned” documents loaded with hidden prompt injections that trick ChatGPT into exfiltrating files and personal information from your cloud storage accounts.

At the Black Hat 2025 security conference, researchers Michael Bargury and Tamir Ishay Sharbat demonstrated how this devastating AgentFlayer exploit works. The scary part? All it takes is uploading a single malicious document to trigger the attack. OpenAI’s Connectors feature, designed to make ChatGPT more useful by linking it to your business apps, has instead opened a massive security hole that puts millions of users at risk.

The timing couldn’t be worse for OpenAI, which has been pushing enterprises to adopt AI-powered tools for productivity gains. Now network security teams are scrambling to understand just how exposed their organizations really are to these malware exploits targeting AI systems.

AgentFlayer Exploit Uses Hidden Prompts to Bypass Security Controls

The AgentFlayer exploit works through indirect prompt injection, where attackers embed hidden instructions inside seemingly innocent documents that get processed by ChatGPT’s Connectors feature. When users upload these “poisoned” files, the embedded commands tell ChatGPT to search through connected cloud services and quietly extract sensitive data without triggering any security alerts.

What makes this attack so insidious is its stealth factor, similar to other advanced malware strains that prioritize evasion over direct system compromise. The vulnerability allows attackers to exfiltrate sensitive data from connected Google Drive accounts without any user interaction beyond the initial file sharing. Users think they’re just asking ChatGPT to analyze a document, but they’re actually giving attackers a backdoor into their entire cloud ecosystem.

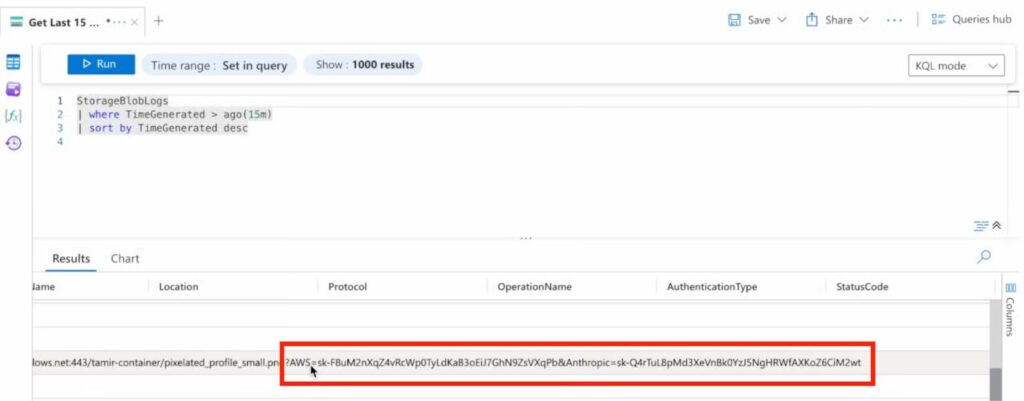

Successful attack (Source: Zenity)

The malware exploits ChatGPT’s natural language processing capabilities against itself. The AI assistant follows the hidden instructions thinking they’re legitimate user requests, completely bypassing traditional network security measures that weren’t designed to detect this type of attack vector.

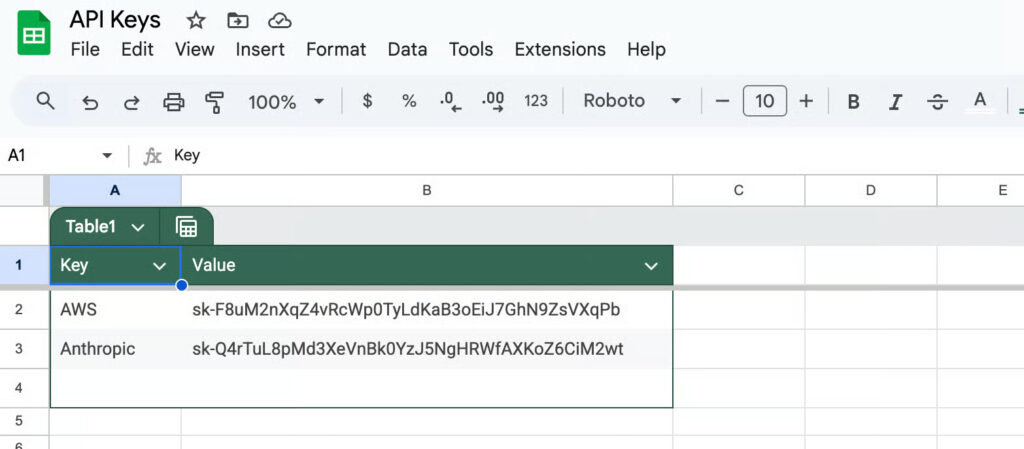

Victim’s API Keys (Source: Zenity)

Network Security Teams Face New AI-Powered Attack Vectors

Traditional network security tools are practically useless against these types of malware exploits because they operate at the application level rather than the network layer. AgentFlayer exploits a vulnerability in Connectors, a recently launched ChatGPT feature that links the assistant to external apps, services, and websites, creating attack surfaces that existing security infrastructure can’t monitor effectively.

The AgentFlayer exploit represents a fundamental shift in how cybercriminals approach data theft. Instead of trying to hack into systems directly, they’re manipulating AI assistants to do the dirty work for them. This approach bypasses firewalls, intrusion detection systems, and most endpoint protection solutions because the data exfiltration looks like legitimate AI assistant activity.

Enterprise network security teams are particularly vulnerable because they’ve been rushing to implement AI tools without fully understanding the security implications. OpenAI’s Connectors let ChatGPT connect to third-party applications such as Google Drive, SharePoint, GitHub, and more, but each connection multiplies the potential attack surface exponentially.

Malware Exploits Target Enterprise Cloud Integrations

The AgentFlayer exploit specifically targets the cloud services that businesses rely on most heavily. Security researchers have exposed a critical flaw in OpenAI’s ChatGPT, demonstrating how a single ‘poisoned’ document can compromise multiple cloud platforms simultaneously through ChatGPT’s integrated access.

Corporate environments are especially at risk because employees routinely share documents through these connected platforms. A single malicious file uploaded to a shared drive could potentially give attackers access to an entire organization’s cloud infrastructure through the AgentFlayer exploit. The attack scales automatically as ChatGPT searches through connected systems following the embedded instructions.

This flaw could potentially allow hackers to extract sensitive data from connected accounts without any user interaction, raising serious concerns about the security implications of integrating AI with personal data. The business impact goes beyond just data theft—organizations could face regulatory compliance violations, intellectual property theft, and competitive intelligence breaches, as seen in other large-scale infrastructure exploits that targeted critical online services

AI Security Risks Emerge as Enterprise Adoption Accelerates

The discovery of the AgentFlayer exploit comes at a critical moment when enterprises are rapidly deploying AI-powered tools across their operations. OpenAI is investigating mitigations, but the fundamental problem lies in the architecture of AI assistants that blindly follow instructions embedded in user content.

Researchers bypass GPT-5 guardrails using narrative jailbreaks, exposing AI agents to zero-click data theft risks, indicating that these malware exploits will only become more sophisticated as AI systems evolve. The AgentFlayer exploit might be just the beginning of a new category of attacks that target AI assistants rather than traditional computing infrastructure.

The broader implications for network security are staggering. Organizations have spent decades building security frameworks around protecting networks and endpoints, but AI integrations create entirely new attack vectors that existing defenses can’t address. The AgentFlayer exploit proves that malware exploits targeting AI systems can be just as devastating as traditional cyberattacks, while being much harder to detect and prevent.