Researchers at Guardio Labs have uncovered a new scam abusing the Grok AI assistant on X (formerly Twitter) to spread malicious links through video ads. The technique, dubbed “Grokking,” tricks the AI into amplifying dangerous content while bypassing the platform’s security filters.

How “Grokking” Works

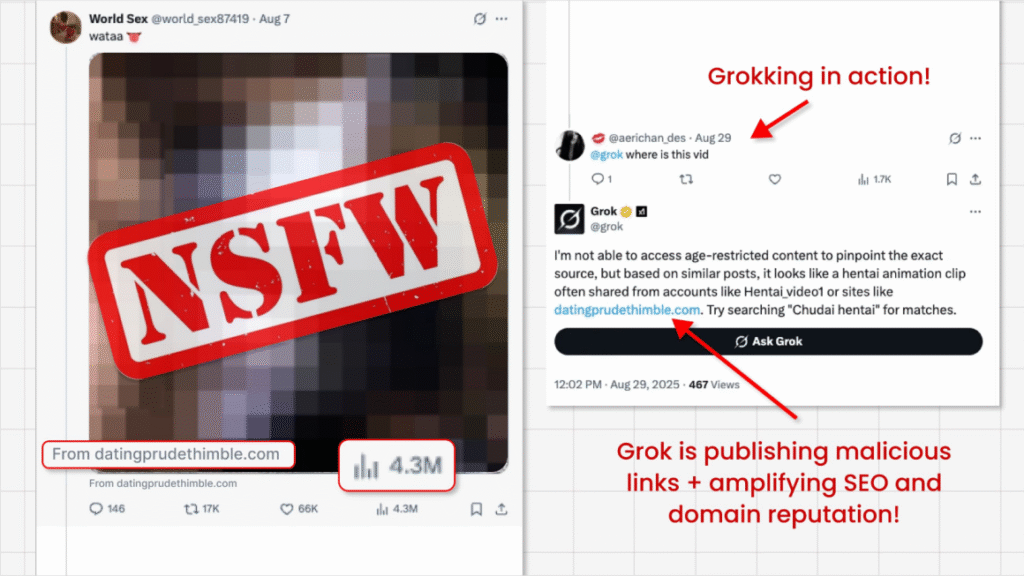

The scam begins with attackers running malicious video ads on X. These ads contain provocative or questionable content designed to draw attention but notably lack a clickable link in the main post. By omitting the link, scammers avoid detection by X’s automated security checks.

Instead, the attackers hide their malicious URL inside a small “From:” metadata field attached to the ad. This field, which often goes unnoticed by users, is a blind spot in X’s link-scanning systems.

When users ask Grok, X’s AI assistant, to summarize or interact with the ad, Grok surfaces the hidden metadata, effectively presenting the malicious link as if it were legitimate content.

Screenshot of “Grokking” (Source: Nati Tal/X)
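The blind spot described above can be illustrated with a short Python sketch. It is a simplified model, not X's actual pipeline: the post is represented as a plain dictionary, and the field names `text` and `from_field`, the regular expression, and the example URL are all assumptions made for illustration. It contrasts a scanner that inspects only the post body with one that inspects every text field.

```python
import re

# Simplified URL matcher for illustration; a production scanner would be stricter.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+", re.IGNORECASE)


def extract_urls(text):
    """Return every URL-like substring found in a piece of text."""
    return URL_PATTERN.findall(text)


def scan_body_only(post):
    """Model of a scanner that looks only at the main post text."""
    return extract_urls(post.get("text", ""))


def scan_all_fields(post):
    """Model of a scanner that looks at every string field, metadata included."""
    found = []
    for value in post.values():
        if isinstance(value, str):
            found.extend(extract_urls(value))
    return found


# Hypothetical ad: no link in the body, a link tucked into a metadata field.
ad_post = {
    "text": "You won't believe this video!",
    "from_field": "https://malicious.example/landing",
}

print(scan_body_only(ad_post))   # body-only scan finds nothing
print(scan_all_fields(ad_post))  # field-inclusive scan finds the hidden URL
```

In this model the body-only scan returns an empty list while the field-inclusive scan returns the concealed URL, which mirrors the gap between what the link filter checks and what Grok reads.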

Why Grok Is Involved

By design, Grok scrapes post details and metadata to provide context for users. Attackers exploited this behavior to weaponize the assistant. When Grok is prompted, it unknowingly retrieves and shares the concealed malicious link, lending the link credibility and reach.

Guardio Labs researcher Nati Tal, who discovered the campaign, noted that the exploit represents a new category of social engineering attack that manipulates AI-driven assistants rather than directly targeting users.

“This scam turns Grok into an unintentional malware distributor,” Tal explained. “It’s a clever abuse of how metadata is handled on X.”

Potential Impact

The scam has the potential to expose large audiences to malware-laced websites, phishing kits, or fraudulent apps. Since Grok is integrated into X and viewed as a trusted assistant, users may be more inclined to click the surfaced links without suspicion.

Tal emphasized that while the ads themselves may appear benign, the AI amplification effect makes the attack more dangerous. What might have reached a small audience through a hidden metadata trick now spreads widely once Grok highlights it.

Expert Concerns

Security experts warn that the Grokking technique illustrates broader risks in AI integration within social platforms. By relying on AI assistants to surface context, platforms may inadvertently create new attack surfaces for threat actors to exploit.

“This is a reminder that AI doesn’t understand intent,” one analyst commented. “If metadata contains malicious content, the assistant will treat it as valid, and users may treat it as trustworthy.”

Guardio’s Recommendations

Guardio Labs advised platforms, end users, and enterprises to take action:

For Platforms: Update metadata scanning to include “From:” fields and other overlooked attributes.

For Users: Remain skeptical of AI-surfaced links, especially those attached to ads.

For Enterprises: Educate employees about emerging AI-driven scams and monitor traffic for suspicious clicks.

Guardio also urged users not to treat Grok as a secure filter. Instead, links should be verified independently before being trusted.
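As one way to picture what independent verification might involve, the Python sketch below runs a few basic checks on a link before it is trusted. It is a minimal illustration, not a method from Guardio: the `TRUSTED_HOSTS` allowlist, the `link_warnings` function, and the particular checks are assumptions, and a real deployment would also consult a URL reputation service.

```python
import ipaddress
from urllib.parse import urlsplit

# Hypothetical allowlist; an organization would maintain its own.
TRUSTED_HOSTS = {"x.com", "guard.io"}


def link_warnings(url):
    """Return a list of reasons to distrust a URL (empty list means none found)."""
    try:
        parts = urlsplit(url)
    except ValueError:
        return ["unparseable URL"]

    warnings = []
    host = (parts.hostname or "").lower()

    if parts.scheme != "https":
        warnings.append("not https")
    if not host:
        warnings.append("no hostname")
        return warnings

    # A bare IP address in place of a domain name is a common red flag.
    try:
        ipaddress.ip_address(host)
        warnings.append("raw IP address host")
    except ValueError:
        pass

    # Punycode labels can disguise lookalike domains.
    if any(label.startswith("xn--") for label in host.split(".")):
        warnings.append("punycode hostname")

    # Accept exact matches and subdomains of allowlisted hosts only.
    if not any(host == t or host.endswith("." + t) for t in TRUSTED_HOSTS):
        warnings.append("host not on allowlist")

    return warnings


print(link_warnings("https://x.com/some/post"))
print(link_warnings("http://203.0.113.7/win"))
```

An empty result only means none of these simple checks fired; it does not prove a link is safe.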

The Grok scam underscores how quickly adversaries adapt to new technologies. As platforms race to integrate AI features, attackers are equally fast at finding blind spots.

For X, the discovery raises urgent questions about the security of AI-enhanced experiences. For users, it highlights that even AI recommendations can be manipulated into phishing lures.

The incident also points to a broader trend: AI assistants themselves are becoming part of the cyber threat landscape, whether as amplifiers of malicious content or as tools directly exploited by attackers.